MT Evaluation Procedures

Latest revision as of 11:09, 3 December 2021

Introduction

This page describes Pangeanic’s procedures for Machine Translation (MT) quality evaluation. The metrics are standardized by defining a mandatory evaluation step in the production pipeline. This applies both to engines created by Pangeanic’s deep-learning team and to engines trained by customers when Pangeanic is required to evaluate them.

Evaluation step in the training pipeline

The pipeline for producing a new neural engine is outlined in the following chart.

The first steps concern the data: acquiring the training corpus, selecting it, and preparing it for training. Typically, several million alignments end up ready to enter the training procedure, but before training starts, a subset of the bilingual corpus is reserved for evaluation.
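Reserving the evaluation subset can be sketched as follows. This is a minimal Python illustration, not Pangeanic’s actual tooling: the function name `split_corpus`, the seeded random selection, and the rule that drops training pairs sharing a source sentence with the held-out set are all assumptions.

```python
import random

def split_corpus(pairs, eval_size, seed=42):
    """Hold out `eval_size` aligned (source, target) pairs for evaluation.

    Returns (train, held_out). Training pairs whose source sentence also
    occurs in the held-out set are dropped (an assumption), so the engine
    is never evaluated on sentences it was trained on.
    """
    if not 0 < eval_size < len(pairs):
        raise ValueError("eval_size must be between 1 and len(pairs) - 1")
    indices = list(range(len(pairs)))
    random.Random(seed).shuffle(indices)  # seeded, so the split is reproducible
    eval_idx = set(indices[:eval_size])
    held_out = [pairs[i] for i in sorted(eval_idx)]
    held_out_sources = {source for source, _ in held_out}
    train = [pair for i, pair in enumerate(pairs)
             if i not in eval_idx and pair[0] not in held_out_sources]
    return train, held_out

# Toy corpus standing in for several million alignments.
corpus = [(f"source sentence {i}", f"target sentence {i}") for i in range(10)]
train, held_out = split_corpus(corpus, eval_size=2)
print(len(train), len(held_out))  # 8 2
```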

Three evaluation steps are executed during the pipeline:

- Internal training driver: an automated step executed after every training epoch to track training progress and drive the model’s evolution. This step is a core procedure in machine learning training.

- Automatic evaluation: executed after training is over, it generates the standard evaluation metrics. Because it is only a refinement of the internal training driver, it doesn’t trigger any validation or rework action.

- Human evaluation: after the engine is finished and packaged in a Docker pod so that it can be included in Pangeanic’s service ecosystem, the human evaluation can be run. Human evaluation generates metrics that might not reach the desired thresholds, in which case the process jumps back to the data selection step and repeats most of the pipeline.
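The control flow of the training driver and the human-evaluation gate might look like the sketch below. Everything in it is hypothetical: the early-stopping rule with `patience`, the per-epoch validation score, and the threshold values only illustrate how a driver tracks progress and how unmet human-evaluation thresholds send the process back to data selection. The automatic evaluation step is omitted because it only reports metrics.

```python
from dataclasses import dataclass

@dataclass
class EpochResult:
    epoch: int
    validation_score: float  # e.g. BLEU on the held-out subset (assumption)

def track_training(history, patience=3):
    """Internal training driver (sketch): remember the best epoch so far and
    signal a stop once `patience` epochs pass without improvement.
    Returns (best_result, epoch_at_which_training_stops)."""
    best, since_best = None, 0
    for result in history:
        if best is None or result.validation_score > best.validation_score:
            best, since_best = result, 0
        else:
            since_best += 1
            if since_best >= patience:
                return best, result.epoch
    return best, (history[-1].epoch if history else None)

def human_evaluation_gate(scores, thresholds):
    """Compare human-evaluation metrics with the desired thresholds.
    Returns ("deploy", []) or ("data_selection", failing_dimensions)."""
    failing = [dim for dim, minimum in thresholds.items()
               if scores.get(dim, 0.0) < minimum]
    return ("data_selection", failing) if failing else ("deploy", [])

history = [EpochResult(1, 30.1), EpochResult(2, 32.4), EpochResult(3, 32.0),
           EpochResult(4, 31.8), EpochResult(5, 31.9)]
best, stopped_at = track_training(history)
print(best.epoch, stopped_at)  # 2 5

# Hypothetical thresholds: fluency falls short, so the pipeline loops back.
print(human_evaluation_gate({"accuracy": 88.0, "fluency": 79.5},
                            {"accuracy": 85.0, "fluency": 80.0}))
# ('data_selection', ['fluency'])
```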

Automated Evaluation

Pangeanic automated evaluation is performed by calculating the TER and BLEU metrics. The main objective of methods and tools for automated MT evaluation is to compute numerical scores that characterize the ‘quality’, or level of performance, of specific Machine Translation systems.

Automated MT evaluation scores are expected to agree (or correlate) with intuitive human judgments about certain aspects of translation quality, or with certain characteristics of the usage scenarios for translated texts.

Automated evaluation tools are typically calibrated against the more expensive, slower, and more intuitive process of human evaluation of MT output. In that process, a group of human judges is asked to read either both the original text and the MT output or just the MT output, and to give their evaluation scores for certain quality parameters.
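Agreement between an automatic metric and human judgments is commonly checked by correlating the two sets of scores for the same outputs. A minimal sketch using Pearson’s correlation coefficient; the scores are invented for illustration, and choosing Pearson rather than a rank correlation is an assumption.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between automatic metric scores and human scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two score lists of equal length (at least 2)")
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must not all be identical")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical scores for four MT outputs.
bleu_scores = [20.0, 30.0, 40.0, 50.0]
human_scores = [2.0, 3.0, 4.0, 5.0]    # e.g. judges' ratings on a 1-5 scale
ter_scores = [0.60, 0.45, 0.35, 0.20]  # lower TER is better
print(pearson(bleu_scores, human_scores))     # 1.0
print(pearson(ter_scores, human_scores) < 0)  # True: TER correlates negatively
```

Because lower TER means better output, a well-calibrated TER shows a negative correlation with human scores, while BLEU should show a positive one.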

We use reference proximity techniques, which replicate the scenario of comparing the target text with the original or with a gold-standard human reference: better MT output is considered to be closer to the reference. In these methods, the distance between an MT output and a professional human translation is computed automatically, for example as the “word error rate” (WER). WER is based on the Levenshtein edit distance (the minimal number of insertions, deletions, and substitutions needed to transform one sentence into the other), usually divided by the number of words in the reference.

However, a standard edit distance (the basis of the WER metric widely used in Automatic Speech Recognition) is considered too simplistic for Machine Translation. Legitimate translation variants often differ in the order of words and phrases without major changes in meaning, yet WER penalizes such reorderings as heavily as using wrong words in one place and inserting redundant or spurious words in another.
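A minimal sketch of word-level WER, following the definition above and normalizing the edit distance by the reference length. The example sentences are hypothetical; they show a legitimate reordering receiving the same penalty as a wrong word plus a spurious word.

```python
def edit_distance(hyp, ref):
    """Word-level Levenshtein distance: the minimal number of insertions,
    deletions and substitutions that turn the word list `hyp` into `ref`."""
    prev = list(range(len(ref) + 1))  # distances from an empty hypothesis
    for i, h in enumerate(hyp, start=1):
        curr = [i] + [0] * len(ref)
        for j, r in enumerate(ref, start=1):
            curr[j] = min(prev[j] + 1,             # delete hypothesis word h
                          curr[j - 1] + 1,         # insert reference word r
                          prev[j - 1] + (h != r))  # substitute (free if equal)
        prev = curr
    return prev[-1]

def wer(hypothesis, reference):
    """Word error rate: edit distance divided by the reference length."""
    hyp, ref = hypothesis.split(), reference.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    return edit_distance(hyp, ref) / len(ref)

reference = "I went home yesterday"
# A legitimate reordering (delete + insert "yesterday") costs 2 edits...
print(wer("yesterday I went home", reference))        # 0.5
# ...exactly as much as one wrong word plus one spurious word.
print(wer("I walked home yesterday too", reference))  # 0.5
```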

A modification of the edit distance that takes into account possible positional variation of continuous word sequences is the Translation Error Rate (TER) metric. It is calculated as the number of “edits” divided by the average number of words in the reference translations. “Edits” cover insertions, deletions, and substitutions, as well as “shifts” (movements of continuous word sequences). TER can take several reference translations into account: the edits are counted against the closest of these references.

An alternative way of measuring the distance between MT output and a reference translation is to calculate their overlap in terms of N-grams (individual words and continuous word sequences of different lengths, usually between 1 and 4 words). This method is the basis of the most widely used family of metrics, including BLEU.
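The N-gram overlap behind BLEU and the final TER normalization can be sketched in plain Python. This is a simplified illustration, not a reference implementation. It computes unsmoothed sentence-level BLEU on a 0-1 scale: clipped n-gram precisions for n = 1 to 4, their geometric mean, and a brevity penalty. The `ter` helper only performs the final division. It assumes the edit count, shifts included, was obtained elsewhere, because TER’s shift search is not shown. Production scores are normally computed with an established tool such as sacreBLEU.

```python
import math
from collections import Counter

def ngrams(words, n):
    """Count the n-grams (as word tuples) in a list of words."""
    return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))

def sentence_bleu(hypothesis, references, max_n=4):
    """Unsmoothed sentence-level BLEU (0..1) against one or more references."""
    hyp = hypothesis.split()
    refs = [r.split() for r in references]
    log_precisions = []
    for n in range(1, max_n + 1):
        hyp_counts = ngrams(hyp, n)
        max_ref_counts = Counter()
        for ref in refs:
            max_ref_counts |= ngrams(ref, n)  # element-wise max over references
        # Clip each hypothesis n-gram count by its maximum reference count.
        clipped = sum(min(count, max_ref_counts[g]) for g, count in hyp_counts.items())
        if clipped == 0:
            return 0.0  # no smoothing: any zero precision gives BLEU = 0
        log_precisions.append(math.log(clipped / sum(hyp_counts.values())))
    # Brevity penalty against the reference length closest to the hypothesis.
    closest_ref_len = min((abs(len(r) - len(hyp)), len(r)) for r in refs)[1]
    if len(hyp) > closest_ref_len:
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - closest_ref_len / len(hyp))
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)

def ter(num_edits, reference_lengths):
    """TER = edits / average reference length (lower is better)."""
    return num_edits / (sum(reference_lengths) / len(reference_lengths))

print(sentence_bleu("the cat is on the mat", ["the cat is on the mat"]))  # 1.0
print(sentence_bleu("the cat is on the", ["the cat is on the mat"]))  # about 0.819
print(ter(3, [10, 14]))  # 0.25
```

The second hypothesis matches every reference n-gram but is one word short, so only the brevity penalty, exp(1 - 6/5), lowers its score.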

Human Evaluation

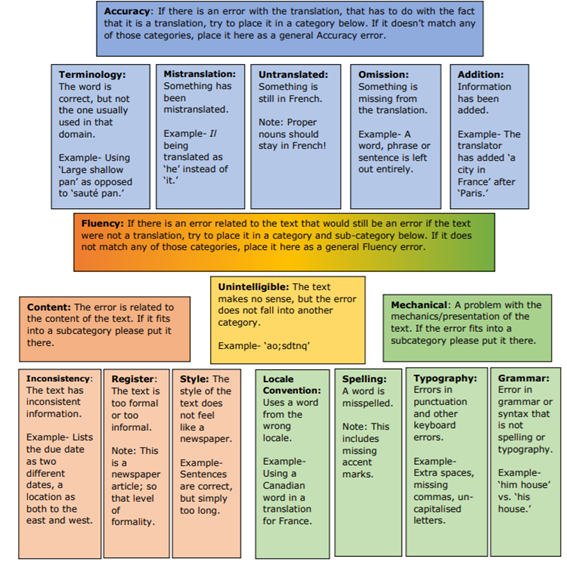

Pangeanic human evaluation is based on DQF-MQM (Multidimensional Quality Metrics).

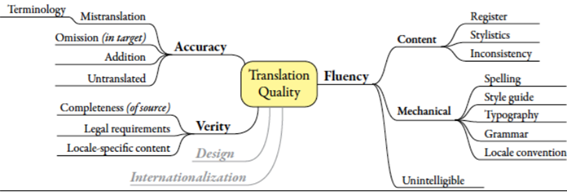

DQF-MQM error typology is a standard framework for defining translation quality metrics. It provides a comprehensive catalogue of quality error types, with standardized names and definitions and a mechanism for applying them to generate quality scores. In this case study, it was used to measure the types of errors appearing in the post-edited translation and their severity, and finally, to check them against the pre-defined desired quality threshold.

The Multidimensional Quality Metrics (MQM) system was developed in the European Union-funded QTLaunchPad project to address the shortcomings of previous quality evaluation methods. Unlike previous quality metric models, MQM was designed from the beginning to be flexible and to work with standards, so that quality evaluation can be integrated into the entire production cycle. It aims to avoid both the problems of a one-size-fits-all model and the fragmentation caused by unlimited customization.

The central component of MQM is a hierarchical listing of issue types. The types were derived from a careful examination of existing quality evaluation metrics and of the issues found by automatic quality checking tools. The issues were restricted to those dealing with language and format.

The current version of the MQM hierarchy contains 114 issue types and represents a generalized superset of the issues found in existing metrics and tools. It is generalized in the sense that some tools go into considerably more detail in particular areas: Okapi CheckMate, for example, has eight separate whitespace-related categories, all of which are generalized to MQM Whitespace. Rather than trying to capture every possible detail, MQM abstracts some fine-grained distinctions away; if a particular application needs them, they can be implemented as custom MQM extensions. The issue types are defined in the MQM definition (http://qt21.eu/mqm-definition).
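The hierarchy, the generalization of tool-specific categories, and custom extensions can be pictured as a simple tree. In this sketch the node names are an illustrative subset whose placement may differ from the official MQM definition. The tool category labels and the `x-double-space` extension are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass(eq=False)
class IssueType:
    """One node of an MQM-style issue-type hierarchy."""
    name: str
    parent: "IssueType | None" = field(default=None, repr=False)
    children: list = field(default_factory=list)

    def add(self, name):
        child = IssueType(name, parent=self)
        self.children.append(child)
        return child

    def path(self):
        node, parts = self, []
        while node is not None:
            parts.append(node.name)
            node = node.parent
        return "/".join(reversed(parts))

# Illustrative subset only; placement is not guaranteed to match
# the official hierarchy at http://qt21.eu/mqm-definition.
root = IssueType("MQM")
accuracy = root.add("Accuracy")
accuracy.add("Mistranslation")
accuracy.add("Omission")
fluency = root.add("Fluency")
fluency.add("Spelling")
typography = fluency.add("Typography")
whitespace = typography.add("Whitespace")

# Fine-grained tool checks (hypothetical labels) generalized to one MQM type.
TOOL_TO_MQM = {
    "double-space": whitespace,
    "leading-space": whitespace,
    "trailing-space": whitespace,
}

# An application that needs the detail can add a custom extension node.
custom = whitespace.add("x-double-space")

def top_dimension(issue):
    """Climb to the first-level dimension (e.g. Accuracy, Fluency)."""
    while issue.parent is not None and issue.parent.parent is not None:
        issue = issue.parent
    return issue

print(TOOL_TO_MQM["trailing-space"].path())  # MQM/Fluency/Typography/Whitespace
print(top_dimension(custom).name)            # Fluency
```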

MTET tool

Pangeanic uses MTET (Machine Translation Evaluation Tool) to execute the human evaluation step on newly created engines.

MTET is a web app for two actor profiles:

- The Project Manager, who creates an evaluation project, decides how it is evaluated (extent and DQF-MQM variant), assigns it to a linguist, and tracks its progress

- The linguist, who evaluates the translations

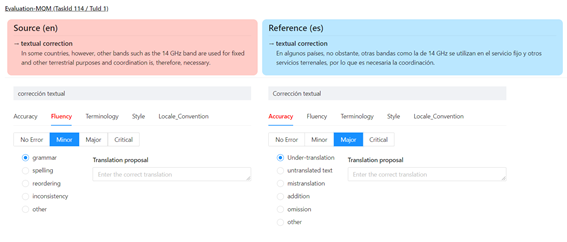

An evaluation is absolute when a score from 0 to 100 is calculated by applying a weight matrix to the typology and severity of the issues found by the evaluator.
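One way to turn reported issues into an absolute 0-100 score is sketched below. The weight matrix (severity weights of 1, 5 and 10, with style issues weighted at half) and the per-100-words normalization are assumptions for illustration. MTET’s actual matrix and formula are not described here.

```python
# Hypothetical weight matrix: penalty points per (issue type, severity).
SEVERITY_WEIGHTS = {"minor": 1.0, "major": 5.0, "critical": 10.0}
TYPE_MULTIPLIERS = {"accuracy": 1.0, "fluency": 1.0, "terminology": 1.0, "style": 0.5}
WEIGHT_MATRIX = {
    (issue_type, severity): multiplier * weight
    for issue_type, multiplier in TYPE_MULTIPLIERS.items()
    for severity, weight in SEVERITY_WEIGHTS.items()
}

def absolute_score(issues, word_count):
    """Score 0-100: 100 minus penalty points per 100 evaluated words,
    clamped at 0. `issues` is a list of (issue_type, severity) pairs."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    penalty = sum(WEIGHT_MATRIX[issue] for issue in issues)
    return max(0.0, 100.0 - 100.0 * penalty / word_count)

issues = [("accuracy", "major"), ("fluency", "minor"), ("style", "minor")]
print(absolute_score(issues, word_count=200))  # 96.75
```

Normalizing by word count keeps scores comparable between evaluation samples of different sizes.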

Evaluations can also be relative, when the evaluator is given a source text, a gold-standard reference, and two different translations of the source. Relative evaluations are the standard for Pangeanic engines, with Google Translate output used as the alternative translation. The final metrics for any engine are the differences, for each dimension (accuracy, fluency, …), between the scores of the Pangeanic output and those of the Google Translate output.
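The differential metric can be sketched as a difference of per-dimension averages. The per-TU score dictionaries and the averaging step are assumptions about how the values are aggregated, and the numbers are invented.

```python
from statistics import mean

def dimension_means(tu_scores):
    """Average per-dimension scores over TUs; each item maps dimension -> score."""
    return {dim: mean(scores[dim] for scores in tu_scores) for dim in tu_scores[0]}

def differential(pangeanic_tus, google_tus):
    """Per-dimension difference of means: Pangeanic minus Google Translate.
    Positive values mean the Pangeanic output was rated higher."""
    ours, theirs = dimension_means(pangeanic_tus), dimension_means(google_tus)
    return {dim: round(ours[dim] - theirs[dim], 2) for dim in ours}

# Hypothetical per-TU scores from a relative evaluation of two TUs.
pangeanic = [{"accuracy": 90.0, "fluency": 88.0}, {"accuracy": 92.0, "fluency": 89.0}]
google = [{"accuracy": 86.0, "fluency": 89.0}, {"accuracy": 86.0, "fluency": 89.0}]
print(differential(pangeanic, google))  # {'accuracy': 5.0, 'fluency': -0.5}
```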

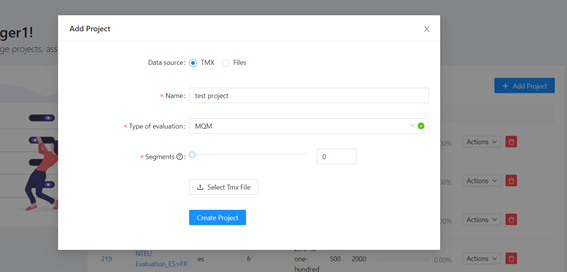

Project Managers’ use of MTET is outlined in the following screenshots.

Creating a new project requires uploading a TMX file containing the source, the reference, the target, and the Google target:

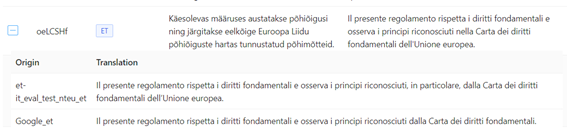

Every TU (translation unit) to be evaluated consists of these four texts. For instance, for Estonian to Italian we have the Estonian source and the Italian reference on top, and the two translations to evaluate, Google’s and Pangeanic’s:

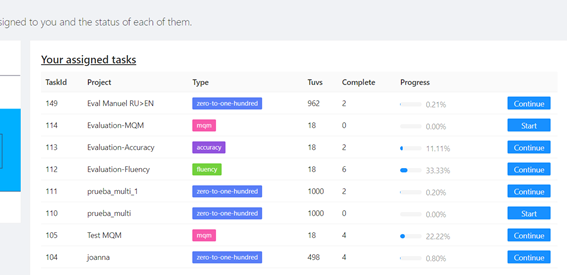
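TMX has no built-in way to mark one target segment as the reference and another as the Google output, so the four texts of a TU must be distinguished somehow. The sketch below assumes, hypothetically, that each target-language `<tuv>` carries a user-defined `<prop type="x-role">`; this is not MTET’s documented format. It parses each `<tu>` into the four texts with Python’s standard `xml.etree.ElementTree`.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"  # how ElementTree names xml:lang

@dataclass
class EvaluationTU:
    source: str
    reference: str
    target: str          # Pangeanic output
    google_target: str   # Google Translate output

# Hypothetical layout: target-language <tuv>s carry an "x-role" <prop>.
SAMPLE_TMX = """<tmx version="1.4">
  <header creationtool="example" creationtoolversion="1.0" segtype="sentence"
          o-tmf="none" adminlang="en" srclang="et" datatype="plaintext"/>
  <body>
    <tu>
      <tuv xml:lang="et"><seg>Tere hommikust!</seg></tuv>
      <tuv xml:lang="it"><prop type="x-role">reference</prop><seg>Buongiorno!</seg></tuv>
      <tuv xml:lang="it"><prop type="x-role">target</prop><seg>Buongiorno!</seg></tuv>
      <tuv xml:lang="it"><prop type="x-role">google</prop><seg>Buon mattino!</seg></tuv>
    </tu>
  </body>
</tmx>"""

def parse_evaluation_tmx(xml_text, source_lang):
    """Turn each <tu> into an EvaluationTU holding the four texts.
    Raises KeyError if a TU lacks one of the expected roles."""
    units = []
    for tu in ET.fromstring(xml_text).iter("tu"):
        texts = {}
        for tuv in tu.findall("tuv"):
            seg = tuv.findtext("seg", default="")
            if tuv.get(XML_LANG) == source_lang:
                texts["source"] = seg
            else:
                texts[tuv.findtext("prop[@type='x-role']")] = seg
        units.append(EvaluationTU(texts["source"], texts["reference"],
                                  texts["target"], texts["google"]))
    return units

for unit in parse_evaluation_tmx(SAMPLE_TMX, source_lang="et"):
    print(unit.source, "|", unit.google_target)  # Tere hommikust! | Buon mattino!
```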

Linguists/evaluators are assigned tasks to complete.

When evaluating, they have a full MQM interface for reporting issues with their typology and severity.

Current metrics

The evaluation metrics for some Pangeanic engines are listed below. Engines are always scored from 0 to 100 and compared with Google Translate.

| Engine/Model | Pangeanic score (0-100) | Google Translate score (0-100) |
|---|---|---|
| English to Greek, generic domain, formal style | 92.45 | 86.60 |
| Dutch to Bulgarian, legal domain | 89.64 | 83.83 |
| English to French, Pharma industry | 83.63 | 67.09 |
| German to English, European Commission domain (Legal) | 63.12 | 58.10 |
| English to Spanish, European Commission domain (Legal) | 80.22 | 69.14 |
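The gaps in the table can be computed directly. This short sketch uses only the scores listed above and reports, for each engine, the difference in points and the gain relative to the Google Translate score.

```python
# (Pangeanic, Google Translate) scores copied from the table above.
RESULTS = {
    "English to Greek, generic domain, formal style": (92.45, 86.60),
    "Dutch to Bulgarian, legal domain": (89.64, 83.83),
    "English to French, Pharma industry": (83.63, 67.09),
    "German to English, European Commission domain (Legal)": (63.12, 58.10),
    "English to Spanish, European Commission domain (Legal)": (80.22, 69.14),
}

def compare(results):
    """Absolute gap in points and gain relative to the Google score (%)."""
    return {
        engine: (round(ours - google, 2), round(100 * (ours - google) / google, 1))
        for engine, (ours, google) in results.items()
    }

for engine, (gap, relative) in compare(RESULTS).items():
    print(f"{engine}: {gap:+.2f} points ({relative:+.1f}%)")
```

Every engine in the table scores above Google Translate. The largest gap, 16.54 points, is for the English to French pharma engine.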