Latest revision as of 09:11, 8 February 2022

Online Learning. Online Trainer Module

The Online Trainer Module is an optional feature that allows ongoing customization and refinement of the installed engines.

A common industrial use of neural natural language processing engines is to provide initial hypotheses, which a human expert then reviews and post-edits. This revision process continuously generates new training data, and the experts’ corrections are excellent material for the engine to learn from its errors, because they provide high-priority, human-approved statistics. Engines can benefit from this new data by incrementally updating the underlying models under an online learning paradigm, so the systems continuously adapt to a given domain or user.

The Online Trainer module implements two functionalities:

- Receive user-corrected data and use it to incrementally improve the neural engine.

- Manage the different instances that evolve differently from a single base model.

The first functionality is achieved in collaboration with the Production Access Server, which receives the new data from users. After new data is received, the module stores it and periodically sends a re-train request to the engine with the new data. Potentially, this can happen on-the-fly in some circumstances, although this scenario is only required when online learning is used in professional translation scenarios (CAT tools and human post-editors).

The data used for re-training must be stored so that the engine can later be re-trained from scratch, which might be needed if the neural engine is upgraded to a new architecture.
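The store-then-retrain flow described above can be sketched as follows. This is a minimal illustration, not the module's actual implementation: `CorrectionBuffer`, `send_retrain`, and `batch_size` are hypothetical names, and a batch-size trigger stands in for the periodic schedule mentioned in the text. Every correction is also kept in an archive so that a future re-train from scratch remains possible.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# (source segment, human-approved correction)
Correction = Tuple[str, str]


@dataclass
class CorrectionBuffer:
    """Hypothetical buffer: archives corrections and batches re-train requests."""

    send_retrain: Callable[[List[Correction]], None]  # assumed engine hook
    batch_size: int = 3                               # assumed trigger, not from the source
    archive: List[Correction] = field(default_factory=list)
    pending: List[Correction] = field(default_factory=list)

    def receive(self, source: str, corrected: str) -> None:
        pair = (source, corrected)
        self.archive.append(pair)   # kept permanently for re-trains from scratch
        self.pending.append(pair)   # waiting for the next incremental re-train
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Send all pending corrections as one re-train request."""
        if not self.pending:
            return
        batch, self.pending = self.pending, []
        self.send_retrain(batch)


# Usage: a plain list stands in for the engine's re-train endpoint.
requests: List[List[Correction]] = []
buffer = CorrectionBuffer(send_retrain=requests.append, batch_size=2)
buffer.receive("Hello", "Hallo")
buffer.receive("Thank you", "Dank u")
buffer.receive("Goodbye", "Tot ziens")
```

In this sketch, the on-the-fly scenario corresponds to `batch_size=1`, where each correction triggers a request immediately.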

Engine re-training creates a new “mirror” instance for a particular application or client, which is saved and can be recalled at a later stage. The preferred terminology of that application or client is stored and kept for the next use.

Engine Instances ("Mirrors" or "Flavors")

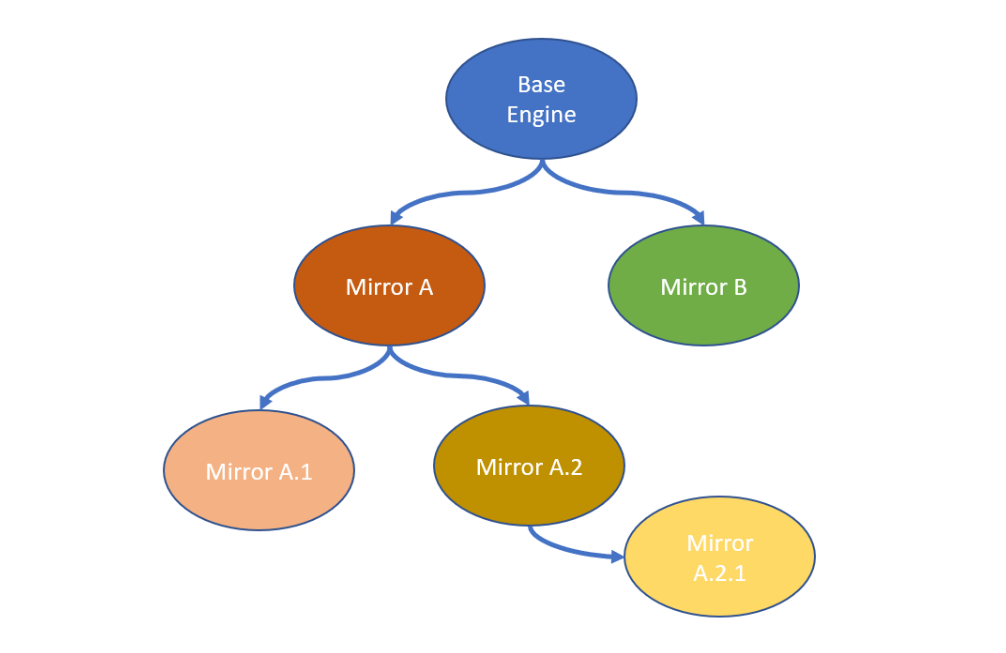

When an engine is installed, its initial language model defines the Base Engine. Before starting the re-train module, the Online Trainer module instantiates a new language model, creating a perfect copy of the base engine. The cloned engine starts re-training with the user-corrected data, and its language model starts diverging from the initial one. Let’s call the new engine “Mirror A”. The “Mirror A” engine learns the characteristics of the text that is translated and post-edited during a project, or supplied afterwards as feedback; let’s call that project “Project A”.

New processing tasks belonging to Project A can be assigned to the Mirror A engine, with an expected improvement in performance. When a new project is started, the administrator can decide whether to use the initial Base Engine or the evolved (Mirror) Project-A engine. In both cases, the administrator also has to decide whether the corrected data from the new project can be used to improve the chosen engine or whether a new instance has to be created; let’s call it Mirror A.1 or Mirror B, depending on whether the A engine or the Base Engine is chosen.
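The cloning and divergence described above can be illustrated with a small sketch. The `Engine` class, its `model` dictionary, and the `lineage` helper are hypothetical stand-ins, assuming only that a clone starts as an exact copy of its parent and then evolves independently; a real language model would replace the dictionary.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Engine:
    """Hypothetical engine instance; `model` is a stand-in for real model weights."""

    name: str
    model: Dict[str, float] = field(default_factory=dict)
    parent: Optional["Engine"] = None

    def clone(self, name: str) -> "Engine":
        # A deep copy guarantees that later re-training of the clone
        # never modifies the engine it was cloned from.
        return Engine(name=name, model=copy.deepcopy(self.model), parent=self)

    def lineage(self) -> List[str]:
        """Names from the Base Engine down to this instance."""
        node: Optional["Engine"] = self
        path: List[str] = []
        while node is not None:
            path.append(node.name)
            node = node.parent
        return list(reversed(path))


base = Engine("Base", {"w": 1.0})
mirror_a = base.clone("Mirror A")
mirror_a.model["w"] = 1.5                 # stand-in for re-training on Project A data
mirror_a1 = mirror_a.clone("Mirror A.1")  # new instance derived from the A engine
mirror_b = base.clone("Mirror B")         # new instance derived from the Base Engine
```

Here Mirror A.1 inherits what Mirror A learned, while Mirror B starts from the unchanged Base Engine.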

The following diagram shows the general case:

Base Engines should never be re-trained unless there is a major update or a large amount of new in-domain data. However, an organization can create several new engines from an initial Base Engine, all based on the existing Base Engine and each adapting differently to new project requirements (online training).

If we think about translation engines in a big organization, we could have a generic Base Engine to translate from English to Dutch, but after some time and several projects, the need might arise for different English-to-Dutch engines for domains such as:

- Legal (Mirror A)

- Legal-Contracts (Mirror A.1)

- Marketing (Mirror B)

- Marketing-WEB-Services (Mirror B.1)

- HR (Mirror C)

And so on.

The number of instance engines cloned directly or indirectly from a single base engine is limited to 30 in the default licensing schema.

The Online Trainer must keep track of all instances, be able to clone existing engines, and catalogue them to allow differentiated access for users in the organization.
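One way to picture the catalogue with differentiated access is a mapping from each engine instance to the user groups allowed to use it. The sketch below is illustrative only: the `Catalogue` class, the group names, and the group-based access model are assumptions, since the source does not describe how access is actually granted.

```python
from typing import Dict, List, Set


class Catalogue:
    """Hypothetical catalogue mapping engine instances to permitted user groups."""

    def __init__(self) -> None:
        self._access: Dict[str, Set[str]] = {}

    def register(self, engine: str, groups: Set[str]) -> None:
        # Store a copy so later changes to the caller's set have no effect.
        self._access[engine] = set(groups)

    def visible_to(self, user_groups: Set[str]) -> List[str]:
        """Engines that share at least one group with the user, sorted by name."""
        return sorted(
            name for name, groups in self._access.items() if groups & user_groups
        )


catalogue = Catalogue()
catalogue.register("Base", {"legal", "marketing", "hr"})
catalogue.register("Mirror A", {"legal"})       # Legal
catalogue.register("Mirror B", {"marketing"})   # Marketing
catalogue.register("Mirror C", {"hr"})          # HR

legal_engines = catalogue.visible_to({"legal"})
```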

There are some restrictions to note:

- New instances can only be created on the same machine where the original (cloned) engine is installed.

- GPU instances share the GPU assigned on the host machine, so running the original and the clone concurrently is not optimal.

- The number of instance engines cloned directly or indirectly from a single base engine is limited to 30 in the default licensing schema.
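The same-machine and clone-count restrictions can be expressed as checks performed before a clone is created. The following is a sketch under stated assumptions: `InstanceRegistry`, `CloneLimitError`, and the host names are hypothetical, and only the limit of 30 and the same-machine rule come from the text above. Clones of clones count against the limit of their original base engine.

```python
from typing import Dict, Optional

MAX_CLONES_PER_BASE = 30  # default licensing schema, per the text above


class CloneLimitError(Exception):
    """Raised when a base engine already has the maximum number of clones."""


class InstanceRegistry:
    """Hypothetical registry enforcing the host and clone-count restrictions."""

    def __init__(self, max_clones: int = MAX_CLONES_PER_BASE) -> None:
        self.max_clones = max_clones
        self._parent: Dict[str, Optional[str]] = {}  # instance -> source engine
        self._host: Dict[str, str] = {}              # instance -> machine

    def add_base(self, name: str, host: str) -> None:
        self._parent[name] = None
        self._host[name] = host

    def root_of(self, name: str) -> str:
        """Follow parent links up to the base engine."""
        while self._parent[name] is not None:
            name = self._parent[name]
        return name

    def clone_count(self, base: str) -> int:
        """Count direct and indirect clones of a base engine."""
        return sum(
            1
            for name, parent in self._parent.items()
            if parent is not None and self.root_of(name) == base
        )

    def clone(self, source: str, new_name: str, host: str) -> None:
        if host != self._host[source]:
            raise ValueError("clone must be created on the source engine's machine")
        base = self.root_of(source)
        if self.clone_count(base) >= self.max_clones:
            raise CloneLimitError(f"{base} already has {self.max_clones} clones")
        self._parent[new_name] = source
        self._host[new_name] = host


# Usage with a small limit so the behaviour is easy to see.
registry = InstanceRegistry(max_clones=2)
registry.add_base("Base", host="host-1")
registry.clone("Base", "Mirror A", host="host-1")
registry.clone("Mirror A", "Mirror A.1", host="host-1")  # indirect clone of Base
```

With `max_clones=2`, a further `registry.clone("Base", "Mirror B", host="host-1")` raises `CloneLimitError`, because Mirror A.1 already counts against the Base Engine.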