
Latest revision as of 09:17, 8 February 2022

Tech Specifications. Deployment Scenario.

This section gives guidelines for setting up a full solution, with some real examples.

All components have been tested and certified to run on Ubuntu 16.04 LTS or later. GPU engines require Ubuntu 18 with CUDA drivers.
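Before installing, it can help to check the operating system of each target machine. The following is a minimal sketch, assuming the standard `/etc/os-release` file format (`ID=ubuntu`, `VERSION_ID="16.04"`). The function names are hypothetical, and reading "Ubuntu 18" as "major version 18 or later" is an assumption. The sketch does not check for CUDA drivers.

```python
import re
from typing import Optional, Tuple


def parse_ubuntu_version(os_release: str) -> Optional[Tuple[int, int]]:
    """Return (major, minor) parsed from /etc/os-release text, or None if not Ubuntu."""
    fields = {}
    for line in os_release.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # skip blank or malformed lines
            fields[key.strip()] = value.strip().strip('"')
    if fields.get("ID") != "ubuntu":
        return None
    match = re.match(r"(\d+)\.(\d+)", fields.get("VERSION_ID", ""))
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def meets_os_requirement(os_release: str, gpu_engine: bool = False) -> bool:
    """Check the Ubuntu version only; CUDA drivers are NOT checked here."""
    version = parse_ubuntu_version(os_release)
    if version is None:
        return False
    # Assumption: "Ubuntu 18" for GPU engines is read as major version 18 or later.
    minimum = (18, 0) if gpu_engine else (16, 4)
    return version >= minimum
```

On a real host, pass the file contents, for example `meets_os_requirement(open("/etc/os-release").read(), gpu_engine=True)` on a machine intended for the GPU Engine Farm.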

Hardware Requirements

Standard client computers are sufficient to run the client components (PGB, PGWeb, CAT tools), provided they can install and run Java 8 (for PGB), a modern web browser (for PGWeb) or the CAT tool.

The remaining components (the server modules) are usually installed on one central server, which hosts the PAS, the database and the optional File Processor and Online Trainer, plus one or more machines that host the engines.

The minimum configuration to run the central component (PAS + database) is a machine with 2 CPUs and 4 GB of RAM (equivalent to an AWS t2.medium instance).

When the File Processor or Online Trainer is installed on the same machine, the minimum requirement is 2 CPUs and 8 GB of RAM (equivalent to an AWS t2.large instance).

The recommended configurations are machines with 4 CPUs and 16 GB (t2.xlarge), or 8 CPUs and 32 GB (t2.2xlarge) for high-performance loads.
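The sizing rules for the central server can be summarised as a small lookup. This is a minimal sketch: the `Instance` type, the function name and the profile labels `"minimum"`, `"recommended"` and `"high_load"` are hypothetical; only the instance types and their CPU/RAM figures come from the paragraphs above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    name: str
    cpus: int
    ram_gb: int


# AWS instance types named in this section, with the CPU/RAM figures given for them.
T2_MEDIUM = Instance("t2.medium", 2, 4)
T2_LARGE = Instance("t2.large", 2, 8)
T2_XLARGE = Instance("t2.xlarge", 4, 16)
T2_2XLARGE = Instance("t2.2xlarge", 8, 32)


def central_server_instance(optional_modules: bool = False, profile: str = "minimum") -> Instance:
    """Pick a size for the central server (PAS + database).

    optional_modules: True if the File Processor or Online Trainer shares the machine.
    profile: hypothetical labels "minimum", "recommended" or "high_load".
    """
    if profile == "minimum":
        return T2_LARGE if optional_modules else T2_MEDIUM
    if profile == "recommended":
        return T2_XLARGE
    if profile == "high_load":
        return T2_2XLARGE
    raise ValueError(f"unknown profile: {profile!r}")
```

Only the minimum profile depends on the optional modules; the recommended and high-load sizes already cover them.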

Each CPU engine instance needs its own CPU core to run concurrently with the others. The PAS takes the number of CPU cores available on the engine hosts into account when it starts or stops engines on request, but the typical number of engines running concurrently still has to be planned in advance to size the machine(s) that host the engines.
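The following toy model illustrates why the core count sets a hard ceiling on concurrency. It is a minimal sketch and not the PAS's actual scheduling logic: the `EngineHost` class and the engine names are hypothetical, and simply refusing a request when no core is free is an assumption made for illustration.

```python
from typing import Set


class EngineHost:
    """Toy model of one engine machine: every running CPU engine holds one core."""

    def __init__(self, cores: int) -> None:
        self.cores = cores
        self.running: Set[str] = set()

    def wake(self, engine: str) -> bool:
        """Start `engine` if it is already running or a core is free; else refuse."""
        if engine in self.running:
            return True
        if len(self.running) >= self.cores:
            return False  # every core is busy: the concurrency limit is reached
        self.running.add(engine)
        return True

    def stop(self, engine: str) -> None:
        """Stop `engine` and free its core (no-op if it is not running)."""
        self.running.discard(engine)
```

With `EngineHost(4)`, four engines start, and a fifth `wake()` returns `False` until one of them is stopped. That fixed ceiling is why the expected concurrency has to be known before the engine machines are chosen.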

Each CPU engine typically requires 4 GB of RAM and one CPU core. Running 4 engines concurrently on the same machine therefore requires 16 GB and 4 CPUs (an AWS t2.xlarge instance is sufficient).
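The arithmetic above generalises to any target concurrency. This is a minimal sketch using the typical figures from the text (4 GB and 1 core per engine); the function names are hypothetical, the default host size corresponds to a t2.xlarge, and memory used by the operating system itself is ignored.

```python
import math
from typing import Tuple

# Typical per-engine figures from the text.
RAM_PER_ENGINE_GB = 4
CORES_PER_ENGINE = 1


def engine_host_requirements(concurrent_engines: int) -> Tuple[int, int]:
    """Return (cpu_cores, ram_gb) needed to run the engines concurrently on one box."""
    return concurrent_engines * CORES_PER_ENGINE, concurrent_engines * RAM_PER_ENGINE_GB


def hosts_needed(concurrent_engines: int, cores_per_host: int = 4, ram_per_host_gb: int = 16) -> int:
    """Number of identical hosts (default: 4 CPUs / 16 GB) for the target concurrency."""
    # A host fits as many engines as its scarcer resource (cores or RAM) allows.
    engines_per_host = min(cores_per_host // CORES_PER_ENGINE, ram_per_host_gb // RAM_PER_ENGINE_GB)
    if engines_per_host == 0:
        raise ValueError("host too small for a single engine")
    return math.ceil(concurrent_engines / engines_per_host)
```

For example, `engine_host_requirements(4)` gives 4 cores and 16 GB, and 16 concurrent engines need 4 hosts of that size. A host with 8 CPUs but only 16 GB still fits just 4 engines, because RAM becomes the limit.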

Example scenario

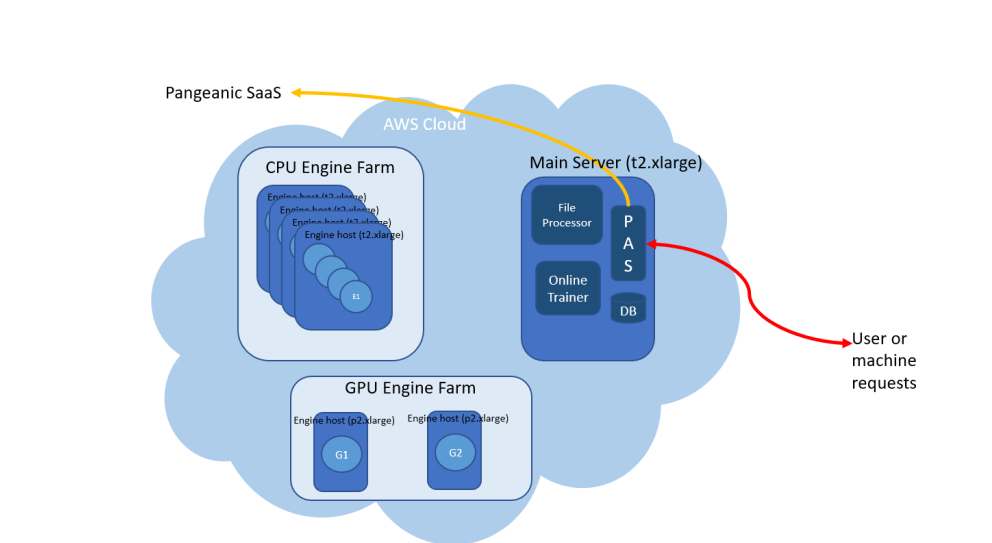

The following scenario describes a full deployment of the Solution on the Amazon Web Services (AWS) cloud.

The Main Server (a t2.xlarge instance) hosts the PAS, the database, and the File Processor and Online Trainer modules. For high reliability, the machine can be duplicated to serve as a backup system.

For high loads, the t2.xlarge instance can be replaced by a t2.2xlarge instance, which doubles the available resources.

Several t2.xlarge machines (4 in this case) form the CPU Engine Farm, each hosting 4 CPU engines that can run concurrently.

More engines can be deployed on each machine, provided they are not normally used concurrently.

Two p2.xlarge instances (GPU instances with one graphics card each) form the GPU Engine Farm for high-performance processes. A single engine is deployed on each machine to allow uninterrupted use of the neural engines.
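The totals of this scenario can be made explicit by writing the topology down as a small inventory. This is a minimal sketch: the `Role` type, the role names and the helper functions are hypothetical; only the instance types, counts and engines per instance come from the description above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Role:
    name: str
    instance_type: str
    count: int
    engines_per_instance: int = 0  # 0 for machines that host no engines


# The example AWS deployment described above (without the optional backup server).
SCENARIO: List[Role] = [
    Role("Main Server (PAS, DB, File Processor, Online Trainer)", "t2.xlarge", 1),
    Role("CPU Engine Farm", "t2.xlarge", 4, engines_per_instance=4),
    Role("GPU Engine Farm", "p2.xlarge", 2, engines_per_instance=1),
]


def total_instances(roles: List[Role]) -> int:
    """Number of EC2 instances in the deployment."""
    return sum(role.count for role in roles)


def concurrent_engines(roles: List[Role], farm: str) -> int:
    """Engines that can run at the same time in the named farm."""
    return sum(role.count * role.engines_per_instance for role in roles if role.name == farm)
```

For this scenario the inventory gives 7 instances (8 with a backup Main Server), up to 16 concurrent CPU engines and 2 GPU engines.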

Note that in this case the PAS is configured with a connection to the Pangeanic SaaS, which means the system can also answer requests directed to engines other than the locally installed ones.
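The effect of the SaaS connection can be pictured as a simple routing decision. This is a minimal sketch of a hypothetical policy, not the PAS's own logic: it assumes engines are identified by language pair, that a locally installed engine is preferred, and that the function name and return labels are illustrative only.

```python
from typing import Set, Tuple

LanguagePair = Tuple[str, str]


def route_request(pair: LanguagePair, local_engines: Set[LanguagePair], saas_enabled: bool = True) -> str:
    """Decide where a translation request goes (hypothetical policy)."""
    if pair in local_engines:
        return "local"  # served by the CPU or GPU Engine Farm
    if saas_enabled:
        return "pangeanic-saas"  # forwarded over the PAS connection to Pangeanic SaaS
    raise LookupError(f"no engine available for {pair[0]}->{pair[1]}")
```

Without the SaaS connection, a request for a language pair that has no local engine simply cannot be served, which is the difference this configuration makes.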